Evolution of Biomarkers in US Healthcare

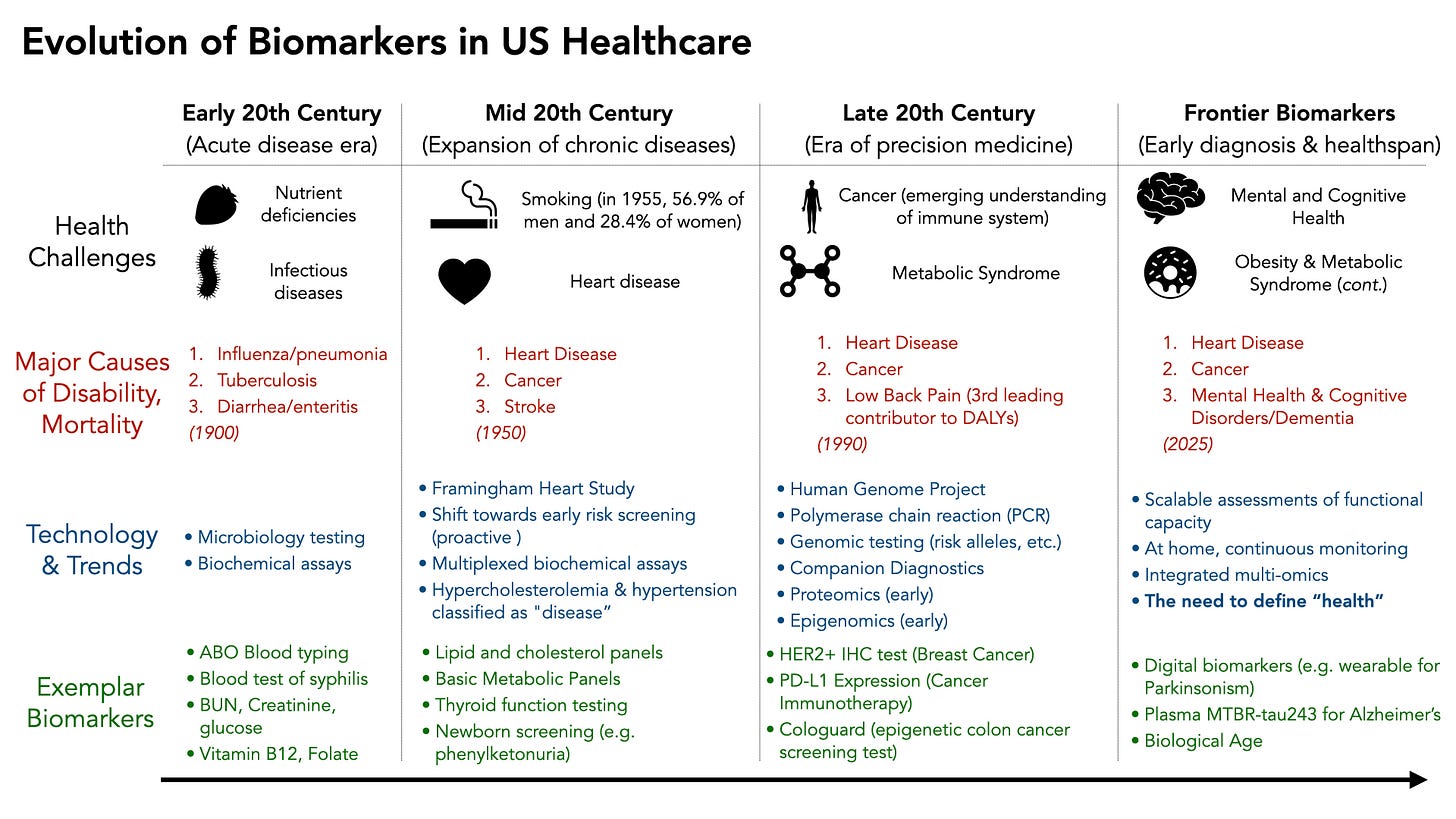

From measuring infectious disease and acute markers of illness, to precision medicine, to defining healthspan, biomarker development and deployment have followed the pressing needs of population health

“Man is the measure of all things” - Protagoras (ca. 485-410 BC)

Humans have long been interested in measuring things. It’s no surprise that one of humankind’s favorite things to measure is humans themselves.

Biomarkers are measurable indicators of different biological states, ranging from simple vital signs to more complex physiological or molecular signatures. In the context of human health, they serve roles in diagnosing disease, predicting mortality risk, directing therapies, and determining one’s exercise capacity. Throughout the last century, biomarker discovery, development, and deployment have evolved in response to both technological developments and the needs of society at the time.

In the early 1900s, acute conditions like infection, trauma, and nutrient deficiencies necessitated methods for detecting infectious disease and vitamin deficiency. As chronic diseases came to dominate in the mid to late 1900s, emphasis shifted towards quantifying the risk of heart disease, diabetes, cancer, etc. With the advent of computational biology, molecular-level population analyses (like the Human Genome Project), and multi-omics methodologies, precision medicine became possible with much more sophisticated biomarkers.

Moving beyond the “absence of disease” definition of health

Over the last 100+ years, the US has become much more capable of diagnosing disease, but the payoff in healthspan has diminished.

This is in part because, for all of the advances in distinguishing disease from “normal,” health itself has never been truly defined.

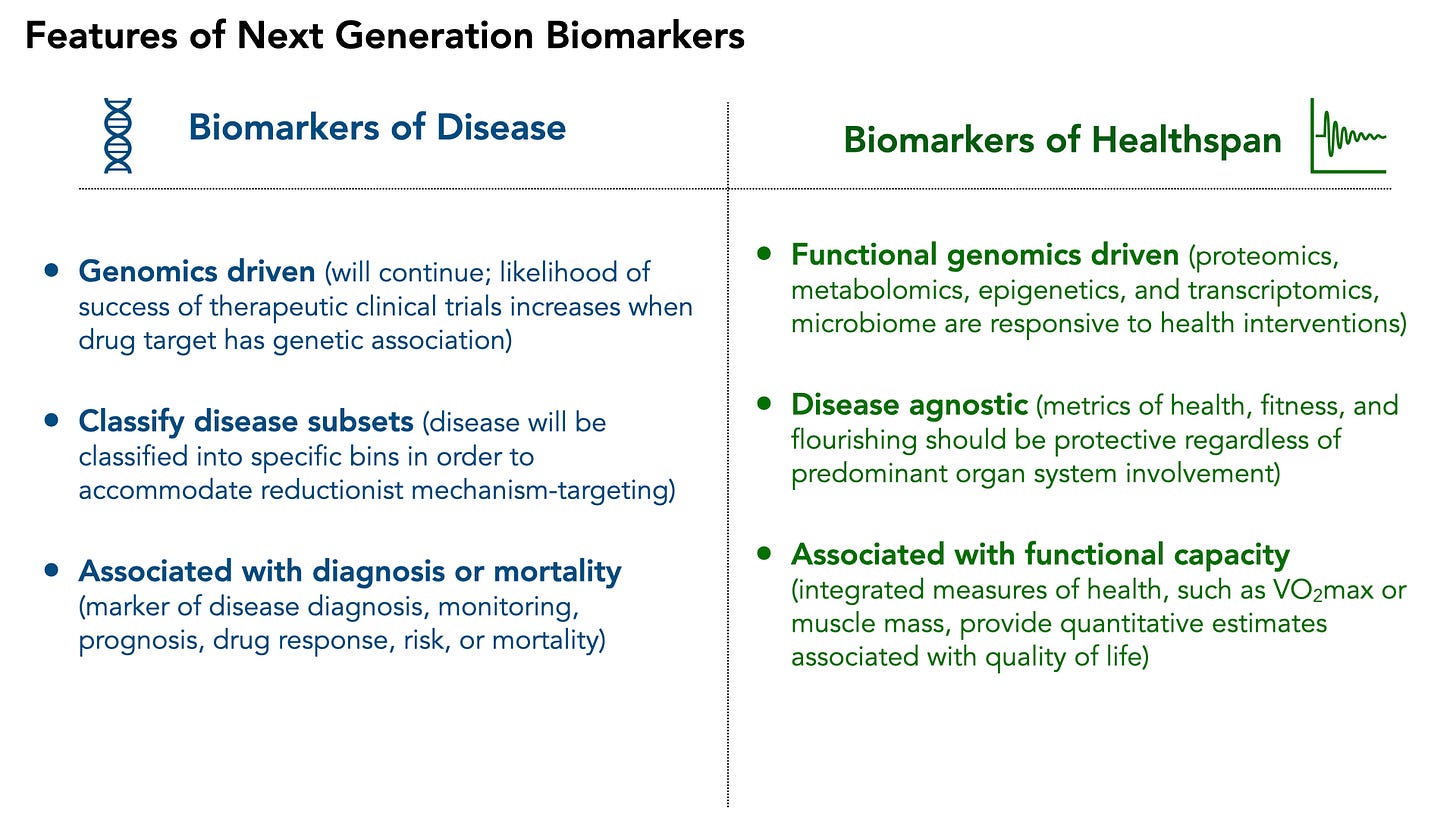

In this article I will briefly describe trends in biomarker development and deployment in the US Healthcare system, and suggest that for healthspan to improve, there needs to be a partitioning of biomarker development:

The natural evolution of precision medicine biomarkers is to continue to molecularly define disease subsets.

There is a glaring unmet need for biomarkers of healthspan to be defined. Clearly, defining health as the “absence of disease” has diminishing returns: there is no North Star for achieving health and quality of life.

I have previously written on what makes a good biomarker and potential functional biomarkers that could redefine “health”. I would love to hear your thoughts to help refine a collective vision of healthspan.

Early 20th Century: The Era of Acute Diseases

Much of the modern US healthcare infrastructure began in the early 1900s, when the dominant health challenges were related to preventing death from acute diseases. Infectious diseases, trauma from war (and the need for safe blood transfusions), and nutritional deficiencies (e.g. scurvy) were leading causes of illness and death. This necessitated innovation in detecting microbial insults, screening blood for incompatibilities, and early biochemical assays of disease status. Several key innovations occurred:

Direct measurement of pathogens for diagnosis and prevention

Malaria parasite blood smears in the 1890s

The first serologic blood test for syphilis, the Wassermann reaction, was developed in 1906, enabling diagnosis of this “social disease” via antibody response

The identification of ABO human blood groups in 1901 (Karl Landsteiner)

Blood groups became one of the first widely implemented “biomarkers” in hospitals and eventually in blood banks

This was driven by an acute need to support lifesaving surgeries and treat injuries in the wars of the early 1900s

Innovations in biochemical assays

The quantitation of blood glucose in the 1910s marked the beginning of a transition from physicians relying on tasting sweet urine to diagnose diabetes, to using quantitative assays for glycemic control.

Nutritional and vitamin deficiencies could be defined and measured

Urea, creatinine, and uric acid were also defined as markers of kidney function, allowing kidney disease to be diagnosed before severe complications developed

In 1900, life expectancy in the United States was 46 years for men and 48 years for women. By 1950, it had jumped to 65.6 years for men and 71.1 years for women.

With the ability to mitigate acute illnesses, and a massive jump in life expectancy, the needs of healthcare changed significantly.

Mid 20th Century: Chronic Diseases Emerge

As Americans began to live longer, the predominant health concerns became chronic diseases like heart disease, cancer, stroke, and diabetes. The solution at the time was to be able to diagnose and define each of these diseases objectively, and ideally as early as possible. The concept of a biomarker as an indicator of future disease risk emerged, as many of these diseases are asymptomatic until very late.

Heart disease for the first time topped the list as the major killer in the US. This prompted the launch of the Framingham Heart Study in 1948, allowing for longitudinal monitoring of heart disease risk factors (in a proactive fashion). Key biomarkers that served as “risk factors” for heart disease included elevated cholesterol, high blood pressure, and ECG abnormalities.

This led to the classification of hypertension and hyperlipidemia as independent, treatable “diseases.” As the concept of asymptomatic population screening emerged, technology adapted. Multi-test “panels” were developed, which gave physicians a standardized set of biomarkers of disease risk. These panels still dominate the practice of medicine today and include:

Electrolyte panels

Lipid panels (HDL, LDL, triglycerides)

Kidney function panels

Liver enzymes

Endocrinology also saw a big increase in biomarker development, with markers like hemoglobin A1C (an integrated measure of long-term glycemic control), thyroid function tests (T4, T3, and TSH), reproductive hormones, cortisol, and insulin (though the latter three are still measured mostly in specialty settings today).

In general, this period began the movement toward biomarkers that enable early diagnosis and early intervention (regardless of symptoms). While diseases were better classified and some chronic diseases saw fewer early deaths, it has been more difficult to capture the effects of early diagnosis on healthspan.

Late 20th Century: Technological Evolution of Disease Classification

The late 20th century and the early 2000s marked a period of intense technological innovation, beginning with the Human Genome Project, innovations in mass spectrometry, and systems biology. There was an increasingly large aging population, enabled by the biomarkers and interventions developed earlier in the century. Diseases related to obesity, metabolic syndrome, dementia, and mental health then dominated society. Innovation was largely driven by the new technologies available (genome sequencing, proteomics), and less by whether it directly addressed the needs of patients living with chronic disease.

The largest beneficiary of the Personalized Medicine movement enabled by the sequencing of the human genome has been the field of cancer diagnosis and treatment. The concept of companion diagnostics emerged, where treatments could be targeted towards a very specific molecular biomarker. A prime example was the approval of trastuzumab (Herceptin) for HER2-positive breast cancer in 1998, which coincided with the first FDA-approved companion diagnostic test (the HercepTest immunohistochemistry assay for HER2 overexpression). In fact, the vast majority of the 188 FDA-approved companion diagnostics are for cancer indications.

Interestingly, we are increasingly seeing objective metrics for complex diseases like Parkinson’s and Alzheimer’s, such as the recently published MTBR-Tau243 blood test, which correlates with AD severity in a dose-response fashion. However, early trials targeting asymptomatic Alzheimer’s disease with anti-amyloid antibodies have so far demonstrated significant side effects with very little benefit.

While the US adopted many more sophisticated biomarkers, the return on healthspan has been minimal. In 2022, life expectancy at birth was 77.5 years for the total U.S. population. Though an imperfect measure of health, we’re not much further ahead than 72 years ago.
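For readers who want to check the arithmetic behind that comparison, here is a minimal sketch using only the life expectancy figures quoted in this article. One loud assumption: the 1900 and 1950 figures are reported separately for men and women, so the code uses their simple midpoint as a rough stand-in for the total population, which is not an official statistic. The function names are illustrative.

```python
# Life expectancy at birth (years), as quoted in this article.
LIFE_EXPECTANCY_BY_SEX = {
    1900: {"men": 46.0, "women": 48.0},
    1950: {"men": 65.6, "women": 71.1},
}
TOTAL_2022 = 77.5  # total U.S. population, as quoted above


def midpoint(year):
    """ASSUMPTION: the men/women midpoint approximates the total population."""
    values = LIFE_EXPECTANCY_BY_SEX[year]
    return (values["men"] + values["women"]) / 2


def gain_per_decade(start_value, end_value, start_year, end_year):
    """Average gain in life expectancy, in years per decade."""
    return (end_value - start_value) / (end_year - start_year) * 10


# First half of the 20th century versus the 72 years that followed.
early_gain = gain_per_decade(midpoint(1900), midpoint(1950), 1900, 1950)
late_gain = gain_per_decade(midpoint(1950), TOTAL_2022, 1950, 2022)

print(f"1900-1950: {early_gain:.2f} years gained per decade")
print(f"1950-2022: {late_gain:.2f} years gained per decade")
```

Under that midpoint assumption, the gain works out to roughly 4.3 years per decade before 1950 and roughly 1.3 years per decade afterward, which is the slowdown described above.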

To both take advantage of novel technologies that can better diagnose and classify disease AND increase population health, there needs to be a shift in the evolution of biomarker development.

The Next Era of Health & Disease Biomarkers

I believe that the natural evolution of biomarker development will diverge into two key classifications: biomarkers of disease (traditional approach, novel techniques) and biomarkers of healthspan (novel approach, new frontier).

Biomarkers of Disease will continue to be dominated by genetic underpinnings. Because drug development strongly emphasizes genetic support (there is evidence that genetic validation of a drug target increases clinical trial success), this is unlikely to change soon. However, the further classification of disease into molecular subsets will not rely on genetics alone. Proteomic biomarkers, especially those measurable through minimally invasive methods, are already emerging as secondary endpoints in Parkinson’s and Alzheimer’s diseases, and might eventually serve as surrogate endpoints, similar to amyloid PET scans. Necessarily, within the disease-focused healthcare model, biomarkers will remain strongly linked to the diagnosis, prognosis, and mortality associated with a given disease.

Biomarkers of Healthspan are much less likely to be genomic. Although genetic associations with athletic performance have been identified, genes themselves are not responsive to interventions (except through gene editing, a topic for another time). For guiding healthspan programs, functional genomics readouts (e.g. RNA, proteins, metabolites, epigenetic marks) are much more likely to play a key role given their responsiveness to environmental, lifestyle, and therapeutic interventions. Furthermore, these biomarkers will be disease-agnostic, shifting the definition of health away from merely the absence of disease toward functional capacity and quality of life. Measures of functional capacity (like VO₂max or insulin sensitivity) predict not only quality of life and resilience but also mortality risk.

Excitingly, we will increasingly see biomarkers measured at home, or on a person’s own time, enabled by wearable technology, increasing consumer health options, and eventual AI-enabled efficiency of health systems.

The Future is Healthspan

Without clearly defining health, quality of life will always be secondary to an intervention’s ability to produce measurable changes in biomarkers.

I envision a future where healthspan biomarkers, supported by sufficient real-world evidence, become the primary metrics for measuring population health. As individuals begin to show early signs of disease—identified through traditional metrics measured infrequently or in response to symptoms—they will transition into precision medicine approaches for disease management. The ultimate goal will not necessarily be to keep individuals within a “normal” range and outside of a “disease” range; rather, it will be to align lifestyle and medical interventions with objective measures of healthspan.

Super interesting article, Brooks. Thanks for sharing! I look forward to reading it more fully later this evening when I am not just procrastinating.

Have a great Monday!

Biomarkers to support increasing Healthcare is where we need to go. However, there needs to be major shifts in the perspectives of the different Healthcare stakeholders to accept treatment or other measures to increase Healthcare.

The biggest challenge will be the studies that generate the evidence to convince regulators.

This is a good example of what I call a fundable frontier. Achieving such a shift is going to require big projects, consortium projects.